DATE – 2007-2009

DISCIPLINE – Science

MEDIUM – Interactive Display

STATUS – Funded by a joint NSERC and Canada Council grant

WEBLINKS

http://imdc.ca/asid/ASID.html

http://www.ryerson.ca/psychology/emotichair/

https://www.newscientist.com/article/dn16738-vibrating-chair-lets-users-rock-to-the-beat/

http://www.ryerson.ca/news/news/Research_News/20090227_Emoti/

http://torontoist.com/2009/02/emoti-chairs_at_clintons/

http://www.torontosun.com/news/torontoandgta/2009/02/26/8536856-sun.html

https://www.thestar.com/life/health_wellness/2008/07/02/emotichair_delivers_good_vibrations_to_deaf.html

Often understood to be diametric opposites, art and technology are the two central elements of my artistic philosophy. Artists convey their thoughts and feelings through creative works, and they are enabled by the multiplicity of choices that technology affords in the form of alternative methods and mediums. Because the mind is perpetually limited by human physicality (McLuhan 19XX), the translation of thought into tangible physical objects outside of the body is, at best, an attempt to creatively express or represent the intended meaning. Working within the limitations of television and film, artists must express their message through the video and audio channels, shaping it to fit this means of dissemination. A problem surfaces, however, when an audience member, such as someone who is deaf or hard of hearing, is unable to access one of these avenues of meaning-making. Several strategies have been developed to address this problem, most visibly the widespread adoption of closed captioning. Even so, those who are deaf or hard of hearing receive a diluted and potentially highly subjective interpretation of non-dialogue sound information such as music and sound effects, because spoken dialogue is prioritized and only so much can fit within the available caption space and time.

I want to construct a multimedia system that gives people who are deaf or hard of hearing the opportunity to access and experience sound information through alternative means, using visual and tactile media to represent meaning that is closer to the film or television content producer’s original intent. Working with the Ryerson human-computer interaction team, I want to enable these individuals to feel and see sound information, privileging the auditory experience as a whole rather than focusing solely on information gained from dialogue.

My role as the artist in this project will be to determine how different types of sound information should be expressed, based on a variety of factors. Music, for example, ranges in style, mood and intensity while spoken dialogue varies according to the speaker’s intonation, speed, and the words that are emphasized, among other factors. The translation of these different factors into visual and tactile outputs requires my unique perspective, as I am knowledgeable and experienced in morphing my ideas into unconventional physical and multimedia representations.

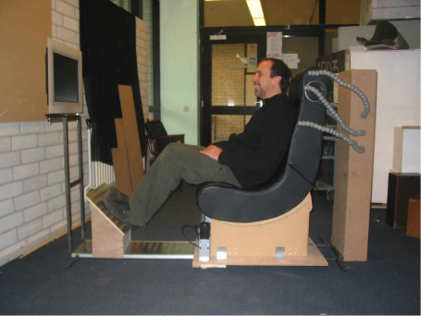

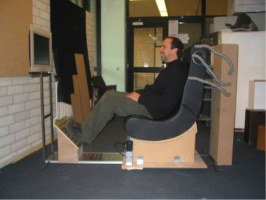

In one of my projects, for example, I wanted to show that while technology can enable communication over distances, something is lost when an individual’s presence is limited to the space and functionality of a computer screen. I created a presence chair that uses a screen at the back of a chair to display the image of the remote person in a video conference, so that this person appears to be sitting in the chair. She can also swivel the chair from the remote location to show that she is directing her attention to someone else in the physical room. With devices such as this, she retains some of the important physical movements that are commonly used in meetings or public speaking events (such as turning to see a speaker). This project was part of the Sentient Creatures lecture series, where Jaron Lanier, renowned digital media artist and computer scientist, presented a “guest” lecture from a remote location to an audience of sixty. Jaron was able to interact with his audience: when he moved, the chair mimicked a variety of his movements, giving him a physical presence within the room (see http://connectmedia.waag.org/media/SentientCreatures/jaronlanier.mov).

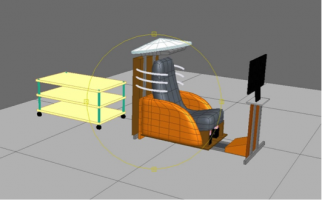

I have made several preliminary sketches (see Figure XX) to illustrate my initial thoughts on the possible functions and aesthetic look of the device that we are constructing. I am eager to develop a relationship with the Ryerson team, for I know that this project has the ability to serve a community that has long been marginalized by mainstream entertainment. By providing alternative representations of sound information, I seek to create an entertainment experience that is as rich and meaningful for deaf and hard of hearing individuals as the audiovisual experience that most hearing individuals are able to enjoy.

For the most part, my work as an artist has centered on the interrelation of art and media. In creating multimedia installations, I challenge my audiences to embrace that which is created through the interplay of computers, art and media, acknowledging their preconceptions in hopes. For example, my art project “Sentor PoBot Goes to Washington” used an early video conferencing system built into a wireless robot. Its purpose was to allow poets and musicians in various remote locations around the world to communicate with people on the streets in front of the White House in Washington, DC. Artists were able to express their opinions on a “virtual soap box” while audience members on the street experienced a different physical and audiovisual form of the artist. Audience members were able to see how art and music, with the assistance of technology, could give artists a presence in a remote location that they would otherwise have been unable to access.

Working with Jeff Mann and Michelle Teran, I created the “Live Form Telekinetics Projects”, which took place simultaneously at InterAccess in Toronto and the WAAG in Amsterdam. We worked with eight artists to create a telepresence dinner linked with various telepresence sculptural objects. Physical objects were duplicated on each side, and when one was manipulated in real space an equivalent manipulation occurred on the other side. For example, if someone “clinked” the wineglass in Toronto, a robotic mechanism clinked the equivalent wineglass in Amsterdam. A telepresence table was built where half the table contained physical objects and the other half contained a video projection from the remote location. If a person in the remote location placed their hand on the table, it would appear as an actual-size hand on the virtual portion of the table at the

FULL CITATION (TITLE/REFERENCE) | REFEREED JOURNAL ARTICLES | CONFERENCE PRESENTATION/POSTER | OTHER (INCLUDING TECHNICAL REPORTS, NON-REFEREED ARTICLES, ETC.)

Accepted/Published

Karam, M., Russo, F., & Fels, D. (2009). Designing the Model Human Cochlea: An ambient crossmodal audio-tactile display. IEEE Transactions on Haptics: Special Issue on Ambient Haptic Systems, 2(3), 160-169. X

Branje, C., Maksimowski, M., Karam, M., Russo, F., & Fels, D.I. (in press). Vibrotactile display of music on the human back. Proceedings of The Third International Conference on Advances in Computer-Human Interactions (ACHI 2010), Barcelona. X

Karam, M., Branje, C., Russo, F., Fels, D.I. (accepted). The EmotiChair – An Interactive Crossmodal tactile music exhibit. ACM CHI, Atlanta, April 2010. X

Branje, C.J., Karam, M., Russo, F., Fels, D.I. (2009). Enhancing entertainment through a multimodal chair interface. IEEE Symposium on Human Factors and Ergonomics. Toronto. X

Karam, M., Nespoli, G., Russo, F., & Fels, D.I. (2009). Modelling Perceptual Elements of Music in a Vibrotactile Display for Deaf Users: A Field Study. In Proceedings of The Second International Conference on Advances in Computer-Human Interactions (ACHI 2009). Cancun. X

Branje, C., Maksimowski, M., Nespoli, G., Karam, M., Fels, D.I., & Russo, F. (2009). Development and validation of a sensory-substitution technology for music. 2009 Conference of the Canadian Acoustical Association. Niagara on the Lake. X

Karam, M., Russo, F., Branje, C., Price, E., & Fels, D.I. (2008). Towards a model human cochlea: sensory substitution for crossmodal audio-tactile displays. In Proceedings of Graphics Interface. Windsor. pp. 267-274. X

Karam, M. & Fels D.I. (2008). Designing a Model Human Cochlea: Issues and Challenges in Crossmodal Audio to Touch Displays. Invited Paper: Workshop on Haptic in Ambient Systems. Quebec City. X

Feel the Music: An accessible concert for deaf or hard of hearing audiences. Live music concert. Clinton’s Tavern. Toronto. (2009) X

Feel the Music: Concert at Ontario Science Centre. Three live bands and vibrochair. (2009). X

Emoti-chair: 5-month installation exhibit at Ontario Science Centre. Weston Family Ideas Centre. (2009). X