DATE 2017

DISCIPLINE Science

MEDIUM Telepresence and tactile interface for autistic children

STATUS Research project started Jan. 2017 at Ryerson University and University College Dublin with FUNDING: Ontario Centre of Excellence

PhD Project Proposal

Graham Thomas Smith

Thematic PhD in Inclusive Design and Creative Technology Innovation

Cognate Fields: Inclusive Design, Robotics, Telematics, Autism, Education

Proposed Supervisory/DSP Team as discussed and agreed with team members:

Principal Supervisor: Prof Lizbeth Goodman, MME: Creative Technology Innovation/Inclusive Design

Co-Supervisor: Dr Brian MacNamee, Insight/Computer Science

Doctoral Studies Panel: Chair- Dr Suzanne Guerin, Psychology/Disability Studies, Prof Goodman, Dr MacNamee

PhD Project Title – WEBMOTI – a multimodal computer interface to help autistic children mature their sensory systems to allow them the ability to balance their cognitive and emotional development.

Main Field of Study – Inclusive Design

Research Question – Can a multimodal computer interface that combines videoconferencing with tactile feedback help young people with autism mature their sensory systems and so balance their cognitive and emotional development?

Webmoti

METHODOLOGY

Children with autism can face ongoing, lifelong challenges with social, cultural and well-being issues. They are often ostracized by peers, misunderstood and excluded, and mistreated by an education system with limited resources and incomplete or ineffective tools. Not only does this potentially contravene the Canadian Charter of Rights and Freedoms, it can also reduce or eliminate an individual’s ability to make cultural, intellectual, work and family contributions as a citizen. This can have a serious and direct impact on that individual’s educational achievement, health and well-being, which in turn can tax the educational and health care systems.

The WebMoti is a tool for observing, with or without participating, how peers in the classroom behave and socialize, using a powerful camera that can zoom in on any part of the classroom. By being the “coolest kid” in the class (inside a computer) and being able to observe without having to participate in person, students with autism spectrum conditions (ASC) are less prone to ostracism and bullying, which can translate into improved self-esteem and a corresponding reduction in absenteeism, depression and related health issues. The WebMoti can make students aware of their hearing and tactile senses and let them manipulate these so that they can attend lessons without being overwhelmed. Manipulating these thresholds may also stimulate sensory awareness and self-actualization [24, 29].

The main qualitative outcome of this project is improved participation in school, which may also translate into improved mental health for children with ASC, both by supporting the sensory maturation process and by reducing the impact of bullying and ostracization. Using a technological intervention that combines a multi-sensory output system (tactile, auditory and visual modalities are available) with a real-time, connected representative (Webchair), children with ASC can control and mediate their sensory environment and their participation in the social setting of school. The main quantitative outcomes will be improved attendance, participation in school, and perhaps academic achievement scores.

Recent research indicates that autism spectrum conditions (ASC) are not a defect but rather a difference [9]. According to the theory of the Socioscheme with the MAS1P (Mental Age Spectrum within 1 Person), autism involves accelerated cognitive maturation and delayed social and physical maturation of the central nervous system [10, 13].

About 1 in 94 children in Canada [26] and 1 in 100 in Europe [10] are diagnosed with ASC. A child with autism is often ashamed, feels suppressed, is angry, and is amazed not to be heard. Such children are fiercely and regularly bullied and have an acute sense of failure. Children with autism tend to drop out of school out of sheer fear of peers and of the educational system, and they run a high risk of depression and lower quality of life [29, 30].

Children with autism often have issues with sensory integration [28, 16], and it has been suggested that this results from a lack of synaptic pruning in the brain [5, 14]. For example, in auditory sensing, maturation normally strengthens foreground sounds and pushes background sounds into the background. When maturation lags, autistic individuals hear everything as it presents itself, which produces a disturbing and painful sound environment. At school, concentration is almost impossible and children are exhausted, which potentially has a serious impact on the child’s health and wellbeing. Allowing children to explore and play with sensory information may help ASC children better understand their sensory needs and self-advocate for necessary accommodations [6, 12, 24].

ASC children have significant sensory processing challenges compared with typically developing peers [20]. Educational programs rarely take this into account when working to accommodate ASC children, and the ABA/IBI therapeutic approach currently widely used in Ontario does little to address cognitive overload. Our WebMoti approach recognizes the need for ASC children to have opportunities to reduce cognitive/sensory overload through remote presence and, at the same time, to use this remote presence to fine-tune the level of interaction and engagement they have with their peers. The potential here is to support the creation of conditions in which the child can gradually increase the amount of face-to-face interaction with peers through guided experience, in full knowledge of the opportunity to step back into our digitally mediated environment. At the same time, WebMoti allows the child to control and fine-tune the kind and amount of somatosensory stimulation necessary for homeostatic balance. Helping a child modulate sensory information based on their own personal needs not only has the potential to develop a greater understanding of their sensory needs and interests [17], but also has a clear influence on their behaviour and can lead to greater self-regulation [9].

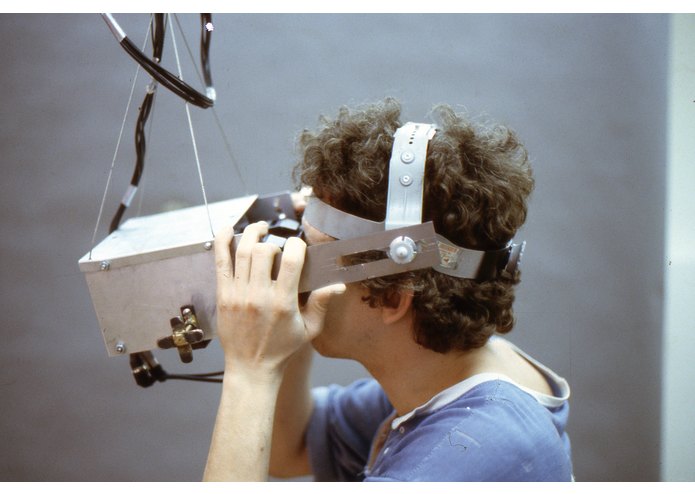

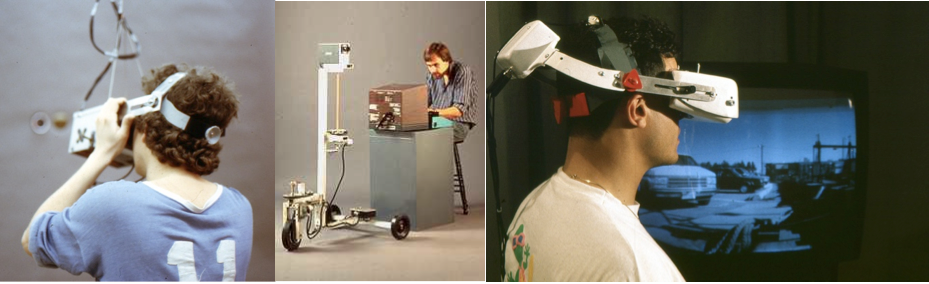

Sensory substitution, such as vibration or visualisation replacing sound and vice versa (e.g., [1, 15]), offers opportunities to mediate sensory stimulation and allows an individual to obtain information by alternative means [2, 3, 4]. The Emoti-chair is a sensory substitution system; when it is combined with the Webchair, students with ASC can use the sensory modality best suited to their informational needs when interacting with others at a distance and in the safety of their own environment. While both systems have been used independently and have been shown to be effective in some domains (e.g., access to sound-based music for the Emoti-chair and remote connectivity for hospital-bound children for the Webchair), there is no system known to the authors that provides multi-sensory input/output across a distance, specifically oriented to children with ASC, who often benefit from alternative stimuli. In this project, we will provide such a system by integrating the Webchair and Emoti-chair, and demonstrate its effectiveness through formative and summative evaluations as described in Section 4.

Webchair in the Netherlands is the only company in the world using videoconferencing technology to give homebound autistic students the chance to integrate into conventional school classrooms. To date over 20 students have been re-integrated into their classes using the technology and pedagogical program developed by the company, which gives the autistic child control over how and when they attend class. When the students are too stressed to attend class physically, for whatever reason, they can come into class via the Webchair from home or from one of the dedicated distance learning centers set up as alternatives to remaining at home in isolation. As a third possibility, the students can attend class from a “safe room” set up in their own school, as sometimes they need to physically leave the classroom but still want to continue to be in class remotely.

This approach has been very successful as the students can control the amount of sensory input they are exposed to in relation to their level of stress at any given time. The students are encouraged to sometimes physically be in their classrooms but this decision is left up to them. It has been observed that the more the children actually attend class the more they connect with other students and begin to understand the complex social environment of the classroom.

The knowledge of how to connect and re-integrate the autistic students and the dedicated piece of technological equipment (the Webchair) are the two things that will be transferred to Ontario. Webchair works closely with Dr. Martine Delfos, who is our leading research professional in the area of autism, and this knowledge will also be transferred to the Ontario team at Ryerson University.

Overall Objective

The main objective of this project is to develop and evaluate a multi-media, multi-sensory connection system, called Webmoti, which supports the social and educational needs of children and teenagers with autism. For those children who have issues with hearing, Webmoti will be evaluated with a variety of audio controls (e.g., stop, adjust volume levels) and sensory substitution techniques for sound alternatives (e.g., vibrotactile or visual representations) to suit different hearing needs.

The goal is to achieve a new way for students with ASC to participate in school that provides them with control and agency. However, it is important that this process fits within a school setting, given the need for technology, particularly video technology, to be present in the classroom. The Accessibility for Ontarians with Disabilities Act and the Intersection of Disability, Achievement and Equity document of the Toronto District School Board [8] provide for accessibility to be embraced by Ontario school boards, and thus this project is consistent with these mandates. The school systems in the Netherlands have begun to incorporate an earlier version of the WebMoti system, called the WebChair, with hundreds being installed over the past three years. However, consultation and pilot studies are required before the WebMoti system can be considered as a widespread solution for children with ASC in Ontario, as there are implications for staff training and for understanding some of the issues that can arise (e.g., occasionally sound can become distorted).

Objective 1: Prototype development

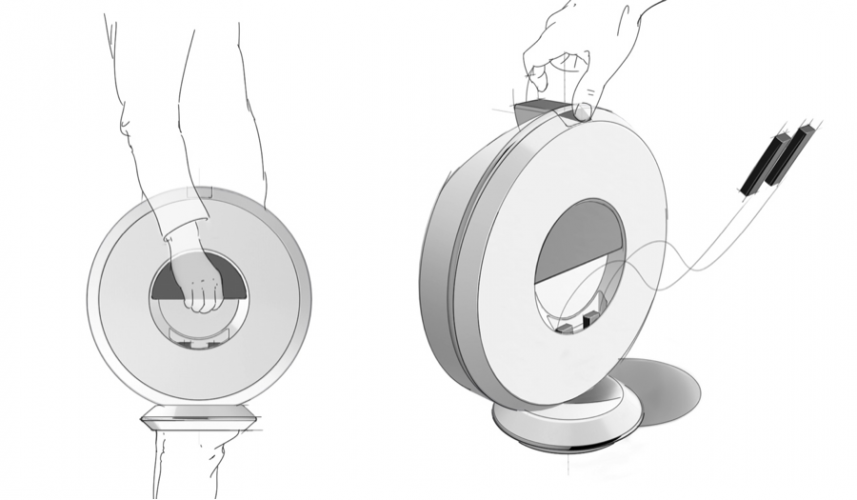

The first objective will be to combine the Webchair and Emoti-chair technologies into one prototype system. Combining the two systems may also involve adding other sensory substitution techniques such as smell, following research carried out by [18]. However, decisions regarding specific sensory preferences and technology options will be made in consultation with users. Part of Nolan’s contribution to the research will focus on further developing and testing a wearable prototype from a previous research project [18] that will provide real-time telemetry related to the stress/anxiety levels of participants.

A formative user-study method will be used to evaluate early iterations of the system with 4 to 5 participant users with ASC, focusing on usability elements (ease of learning and use, and satisfaction) [27]. The objective of these evaluations is to engage users in finding interaction problems and in working out technological solutions to them during prototyping.

Once these participant users are satisfied that the system does not have major interaction issues, a longitudinal evaluation of the system will occur with participants from the Toronto area for at least one school term to assess the long-term efficacy of the system. Participants will use WebMoti to attend school when they are unable to attend physically (which can vary from infrequently to often). Each session with WebMoti will be video/audio recorded. In addition, all stakeholders, including the students themselves, teachers, parents, and healthcare providers, will be asked to complete a pre-study survey followed by bi-weekly surveys to track change and difference. Once the study term is complete, all stakeholders will be asked to either complete a summary survey or participate in one-on-one interviews. The purpose of this last step is to gather impressions and attitudes towards the process and technology, likes/dislikes, and any issues that should be addressed in future work.

For the usability evaluations, the 10-item System Usability Scale [7] will be used. In addition, the attitude toward well-being instrument Life Satisfaction Matrix [19] and the Sensory Behaviour Scale [11] will be evaluated for their efficacy in this context. The objective of these evaluations is to gather users’ reactions to and performance with different versions of the prototype, with the aim of finding user interaction problems and rectifying them.
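As an illustration of how responses to the 10-item System Usability Scale [7] are conventionally turned into a single score, the following minimal Python sketch applies the standard scoring rule: odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a value from 0 to 100. The function name `sus_score` and the input format (a list of ten integer ratings in item order) are assumptions made for this example and are not part of the project's tooling.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one respondent.

    `responses` is a hypothetical input format: ten integer ratings
    (1 = strongly disagree ... 5 = strongly agree) in item order.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded.
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded.
            total += 5 - rating
    # The 0-40 raw sum is rescaled to 0-100.
    return total * 2.5
```

With only 4 to 5 formative participants, such scores would be reported descriptively alongside the qualitative findings rather than tested statistically.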

Data from the formative user studies will be analysed using qualitative methods such as thematic analysis [22] as there is insufficient power to use more than descriptive statistics. The results from the analysis will be fed back into the development process.

Objective 2: Summative evaluation

Once the research team is satisfied that the system does not have major interaction issues, a longitudinal summative evaluation will occur. For this evaluation, systems will be placed with 16 participants in Ontario for at least one school term to assess the long-term efficacy of the system.

Participants will use WebMoti to attend school when they are unable to attend physically (which can vary from infrequently to often). Each session with WebMoti will be video/audio recorded. In addition, all stakeholders, including the students themselves, teachers, parents, and healthcare providers, will be asked to complete a pre-study survey followed by bi-weekly surveys to track change and difference. Once the study term is complete, all stakeholders will be asked to either complete a summary survey or participate in one-on-one interviews, depending on availability. The purpose of this last step is to gather impressions and attitudes towards the process and technology, likes/dislikes, and any issues that should be addressed in future work. We anticipate this phase to last 18 months, corresponding with school terms (i.e., not including summer months).

The quantitative data (responses to forced-choice questions) will be analyzed using repeated measures ANOVA and correlation to examine change over time. Qualitative data will be analyzed using thematic analyses. The results will then be used to inform a framework of sensory substitution techniques, ASC and health and wellbeing outcomes based on the instruments described in Objective 1.
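To make the repeated measures ANOVA step concrete, the sketch below computes the F statistic for a one-way within-subjects design in plain Python, treating each survey wave as a level of the repeated factor. It assumes complete data (every participant answered every wave) and does not check sphericity or compute a p-value, which would normally come from a statistics package. The function name `repeated_measures_f` and the data layout are assumptions for illustration only.

```python
def repeated_measures_f(scores):
    """One-way repeated measures ANOVA F statistic.

    `scores` is a hypothetical layout: one row per participant,
    one column per survey wave, with no missing values.
    Returns (F, df_wave, df_error).
    """
    n = len(scores)                      # participants
    k = len(scores[0]) if scores else 0  # survey waves
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least 2 participants and 2 complete waves")

    grand_mean = sum(sum(row) for row in scores) / (n * k)
    wave_means = [sum(row[j] for row in scores) / n for j in range(k)]
    person_means = [sum(row) / k for row in scores]

    # Partition the total variation into wave, participant, and residual parts.
    ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
    ss_wave = n * sum((m - grand_mean) ** 2 for m in wave_means)
    ss_person = k * sum((m - grand_mean) ** 2 for m in person_means)
    ss_error = ss_total - ss_wave - ss_person

    df_wave = k - 1
    df_error = (k - 1) * (n - 1)
    # Raises ZeroDivisionError if the residual variation is exactly zero.
    f_value = (ss_wave / df_wave) / (ss_error / df_error)
    return f_value, df_wave, df_error
```

Removing the between-participant variation (`ss_person`) from the error term is what distinguishes this design from an ordinary one-way ANOVA: each stakeholder serves as their own baseline, so change over the term is judged against within-person variability.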

REFERENCES

[1] Abboud, S., Hanassy, S., Levy-Tzedek, S., Maidenbaum, S., & Amedi, A. (2014). EyeMusic: Introducing a “visual” colorful experience for the blind using auditory sensory substitution. Restorative neurology and neuroscience, 32(2), 247-257.

[2] Attwood, T. (2006). The complete guide to Asperger’s syndrome. Jessica Kingsley Publishers.

[3] Baranek, G. T. (2002). Efficacy of sensory and motor interventions for children with autism. Journal of autism and developmental disorders, 32(5), 397-422.

[4] Baranek, G. T., David, F. J., Poe, M. D., Stone, W. L., & Watson, L. R. (2006). Sensory Experiences Questionnaire: Discriminating sensory features in young children with autism, developmental delays, and typical development. Journal of Child Psychology and Psychiatry, 47(6), 591-601.

[5] Bourgeron, T. (2009). A synaptic trek to autism. Current opinion in neurobiology, 19(2), 231-234.

[6] Broderick, A. A., & Ne’eman, A. (2008). Autism as metaphor: Narrative and counter-narrative. International Journal of Inclusive Education, 12(5–6), 459–576.

[7] Brooke, J. (1996). SUS-A quick and dirty usability scale. Usability evaluation in industry, 189(194), 4-7.

[8] Brown, R. S. & Parekh, G. (2013). The intersection of disability, achievement, and equity: A system review of special education in the TDSB (Research Report No. 12-13-12). Toronto, Ontario, Canada: Toronto District School Board.

[9] Case-Smith, J., Weaver, L. L., & Fristad, M. A. (2014). A systematic review of sensory processing interventions for children with autism spectrum disorders. Autism, 1362361313517762.

[10] Delfos, M.F. (2016). Wondering about the world. About Autism Spectrum Conditions. Amsterdam: SWP.

Elsabbagh, M., Divan, G., Koh, Y. J., Kim, Y. S., Kauchali, S., Marcín, C., … & Fombonne, E. (2012). Global prevalence of autism and other pervasive developmental disorders. Autism Research, 5(3), 160-179.

[11] Harrison, J., & Hare, D. J. (2004). Brief report: Assessment of sensory abnormalities in people with autistic spectrum disorders. Journal of Autism and Developmental Disorders, 34(6), 727-730. doi:10.1007/s10803-004-5293-z.

[12] Harley, D., McBride, M., Chu, J. H., Kwan, J., Nolan, J., & Mazalek, A. (2016). Sensing context: Reflexive design principles for intersensory museum interactions. MW2016: Museums and the Web 2016.

[13] Hong, H., Kim, J. G., Abowd, G. D., & Arriaga, R. I. (2012, February). Designing a social network to support the independence of young adults with autism. In Proceedings of the ACM 2012 conference on Computer Supported Cooperative Work (pp. 627-636). ACM.

[14] Johnson, M. H. (2005). Sensitive periods in functional brain development: Problems and prospects. Developmental psychobiology, 46(3), 287-292.

[15] Karam, M., Branje, C., Russo, F., Fels, D.I. (2010). Vibrotactile display of music on the human back. ACHI2010. Barcelona. Pp. 154-159.

[16] Kern, J. K., Trivedi, M. H., Grannemann, B. D., Garver, C. R., Johnson, D. G., Andrews, A. A.,… & Schroeder, J. L. (2007). Sensory correlations in autism. Autism, 11(2), 123-134.

[17] Kern, J. K., Trivedi, M. H., Garver, C. R., Grannemann, B. D., Andrews, A. A., Savla, J. S., & Schroeder, J. L. (2006). The pattern of sensory processing abnormalities in autism. Autism, 10(5), 480-494.

[18] Koller, D., McPherson, A., Lockwood, I., Blain-Moraes, S., & Nolan, J. (Submitted). The Impact of Snoezelen in Pediatric Complex Continuing Care: A Pilot Study. Journal of Pediatric Rehabilitation Medicine.

[19] Lyons, G. (2005), The Life Satisfaction Matrix: an instrument and procedure for assessing the subjective quality of life of individuals with profound multiple disabilities. Journal of Intellectual Disability Research, 49: 766–769. doi: 10.1111/j.1365-2788.2005.00748.x

[20] Marco, E. J., Hinkley, L. B., Hill, S. S., & Nagarajan, S. S. (2011). Sensory processing in autism: a review of neurophysiologic findings. Pediatric Research, 69, 48R-54R.

[21] McBride, M., Harley, D., Mazalek, A., & Nolan, J. (2016). Beyond Vapourware: Considerations for Meaningful Design with Smell. CHI’16, Extended Abstracts on Human Factors in Computing Systems. ACM. San Jose, U.S.

[22] Miles, M.B., Huberman, M.A., Saldana, J. (2014). Qualitative Data Analysis. Sage Publishing: London

[23] Nielsen, J. (1993). “Iterative User Interface Design”. IEEE Computer vol.26 no.11 pp 32-41.

[24] Nolan, J., McBride, M., & Harley, D. (2016; draft). Making sense of my choices: an autistic self-advocacy handbook. RE/Lab working paper series.

[25] Oesterwalder, A. (2016). Business Model Canvas Tool. Retrieved Feb. 22, 2016 from http://businessmodelgeneration.com/canvas/bmc.

[26] Ouellette-Kuntz, H., Coo, H., Lam, M., Breitenbach, M. M., Hennessey, P. E., Jackman, P. D.,… & Chung, A. M. (2014). The changing prevalence of autism in three regions of Canada. Journal of autism and developmental disorders, 44(1), 120-136.

[27] Rogers, Y., Sharp, H., Preece, J. (2011). Interaction Design: beyond human-computer interaction. Wiley: Toronto.

[28] Stoddart, K. P. (2005). Children, youth and adults with Asperger syndrome: Integrating multiple perspectives. Jessica Kingsley Publishers.

[29] Strang, J. F., Kenworthy, L., Daniolos, P., Case, L., Wills, M. C., Martin, A., & Wallace, G. L. (2012). Depression and anxiety symptoms in children and adolescents with autism spectrum disorders without intellectual disability. Research in Autism Spectrum Disorders, 6(1), 406-412.

[30] van Heijst, B. F., & Geurts, H. M. (2014). Quality of life in autism across the lifespan: A meta-analysis. Autism, 136-153.

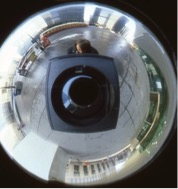

The world exists without the construct of a frame: the spherical 360 by 180 degree bubble of all possible perspectives that defines a point in space is in essence “borderless”, and it is only the constraint of human vision, which limits our view to a portion of this “sphere in space”, that defines a frame. As humans we look all around us over time, scanning our environment and viewing the world as a series of “frames” that allows us to build up an understanding of the world around us and navigate through it. It is this element of the human experience, the limits to our visual construct and the integration of time into our perceptual understanding of the world, that lies at the heart of HELIX, a piece which expands the human perception of panoramic space.
